Sonnet 3.7 vs Gemini 2.5 Pro: Real-World Coding Comparison in Cursor

Cursor IDE + AI Models: What You Need to Know Before Choosing

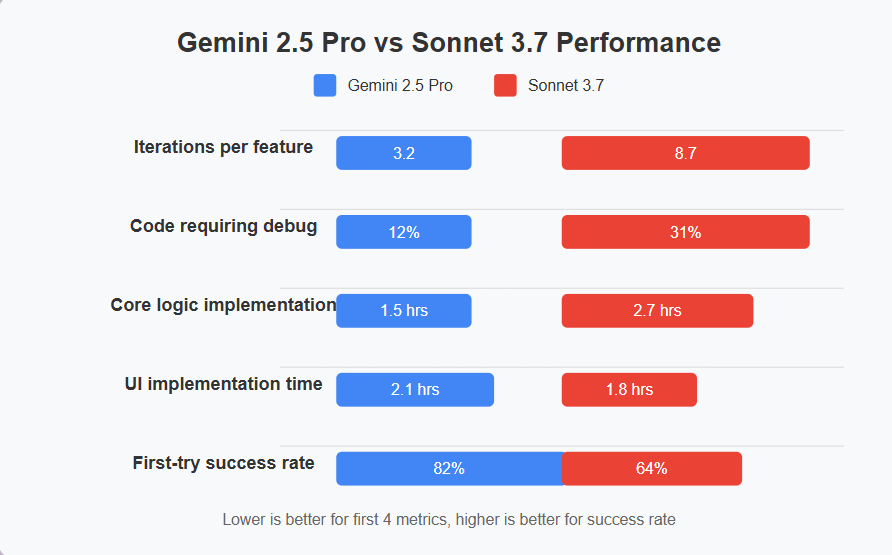

Inside an AI coding environment like Cursor, subtle differences between large language models (LLMs) quickly become important. This article compares how Sonnet 3.7 and Gemini 2.5 Pro perform on a real-world test: building a multiplayer, AI-powered guessing game.

Choosing the right model can substantially reduce development time and affects code quality. The comparison below is based on hands-on testing and is meant to help you make an informed choice.

Sonnet 3.7 VS Gemini 2.5 Pro | Cursor Vibe Coding

See how Sonnet 3.7 and Gemini 2.5 Pro stack up inside Cursor in this side-by-side walkthrough.

If you're just starting with Cursor, you may also want to check out our step-by-step Cursor AI guide to get familiar with the environment.

Test Scenario Overview: Building a Game

The test project was inspired by "AI Telephone," a game in which players submit prompts, an AI generates images from them, and the other players guess the original prompts.

File Setup

- main.py: ~1000 lines of Python logic implementing game mechanics and scoring algorithms

- index.html: ~800 lines of frontend code (HTML, CSS, and vanilla JavaScript)

Keeping all logic in two files made the project simpler for the models to process and manage.

Game Requirements

- 3-player minimum with lobby system

- Prompt submission and Midjourney-based image generation

- Scoring based on semantic similarity

- Real-time feedback and scoreboard

- Mobile-responsive interface
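The lobby and scoreboard requirements come down to a small amount of state management. Here is a minimal sketch, assuming a single in-memory game with no networking, persistence, or image generation; the `Lobby` class and its method names are hypothetical and are not taken from either model's output.

```python
from dataclasses import dataclass, field

MIN_PLAYERS = 3  # minimum from the game requirements


@dataclass
class Lobby:
    """Hypothetical in-memory lobby: players join, play starts at 3+."""

    players: list[str] = field(default_factory=list)
    scores: dict[str, int] = field(default_factory=dict)
    started: bool = False

    def join(self, name: str) -> None:
        if self.started:
            raise RuntimeError("game already in progress")
        if name in self.scores:
            raise ValueError(f"{name!r} is already in the lobby")
        self.players.append(name)
        self.scores[name] = 0

    def can_start(self) -> bool:
        return len(self.players) >= MIN_PLAYERS

    def start(self) -> None:
        if not self.can_start():
            raise RuntimeError(
                f"need at least {MIN_PLAYERS} players, have {len(self.players)}"
            )
        self.started = True

    def add_points(self, name: str, points: int) -> None:
        self.scores[name] += points  # raises KeyError for unknown players

    def scoreboard(self) -> list[tuple[str, int]]:
        # Highest score first; ties broken alphabetically for a stable display.
        return sorted(self.scores.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    lobby = Lobby()
    lobby.join("ana")
    lobby.join("ben")
    print(lobby.can_start())  # False: only two players
    lobby.join("cy")
    lobby.start()
    lobby.add_points("ben", 80)
    lobby.add_points("cy", 80)
    lobby.add_points("ana", 45)
    print(lobby.scoreboard())  # [('ben', 80), ('cy', 80), ('ana', 45)]
```

Sorting on `(-score, name)` keeps the scoreboard order deterministic when players tie, which avoids entries visibly swapping places between real-time updates.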

This setup tested each model's ability to handle game mechanics, UI, and back-end logic without the added complexity of a multi-file structure.

Code Comparison: Real Examples

The differences become clearer when examining actual code. Here's how each model approached the game's scoring system:

Gemini 2.5 Pro's Approach:

Sonnet 3.7's Approach:

Gemini's code is focused and functional, while Sonnet adds unrequested features like difficulty levels and analytics.
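For reference, a scoring function limited to the stated requirement (semantic similarity between the original prompt and a guess) can be quite small. This sketch is not either model's output. It assumes a 0 to 100 point scale and uses bag-of-words cosine similarity as a crude stand-in for true semantic similarity; a production version would more likely compare sentence embeddings. The function names are hypothetical.

```python
import math
import re
from collections import Counter


def _word_counts(text: str) -> Counter:
    # Lowercased word counts: a crude stand-in for a sentence embedding.
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity of two texts' word-count vectors, in [0, 1]."""
    va, vb = _word_counts(a), _word_counts(b)
    dot = sum(va[w] * vb[w] for w in va.keys() & vb.keys())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text shares nothing with anything
    return min(1.0, dot / (norm_a * norm_b))  # clamp float rounding above 1


def score_guess(original_prompt: str, guess: str, max_points: int = 100) -> int:
    """Scale similarity to an integer score between 0 and max_points."""
    return round(cosine_similarity(original_prompt, guess) * max_points)


if __name__ == "__main__":
    prompt = "a red fox in the snow"
    print(score_guess(prompt, "a red fox in the snow"))  # 100
    print(score_guess(prompt, "a fox in snow"))  # 82
    print(score_guess(prompt, "blue whale"))  # 0
```

A minimal baseline like this also makes unrequested additions, such as difficulty levels or analytics, easier to spot when reviewing a model's changes.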

Hallucination Examples

Sonnet occasionally "hallucinated" components that didn't exist. For example, when implementing the player lobby, it generated code referencing a non-existent UserProfileManager class that it believed had been created earlier. This caused errors, and debugging time was needed to track down the phantom dependency.

Gemini rarely exhibited these issues, maintaining consistent awareness of the project's actual state.
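One cheap safeguard against phantom dependencies like UserProfileManager is to statically check generated Python for names that are used but never defined or imported. The sketch below relies only on the standard-library `ast` module; it is deliberately coarse (it ignores scoping), and `find_undefined_names` is a hypothetical helper, not a Cursor feature. A linter such as pyflakes performs this check properly, with scope awareness, and reports each case as an "undefined name".

```python
import ast
import builtins


def find_undefined_names(source: str) -> set[str]:
    """Return names that are read somewhere in `source` but never bound.

    Deliberately coarse: scopes are ignored, so a name bound anywhere in the
    module (def, class, import, assignment, argument, except-as) counts as
    defined everywhere.
    """
    tree = ast.parse(source)
    defined = set(dir(builtins))
    used = set()
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            defined.add(node.name)
        elif isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                # "import a.b" binds "a"; "... import a as x" binds "x".
                defined.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.arg):
            defined.add(node.arg)
        elif isinstance(node, ast.ExceptHandler) and node.name:
            defined.add(node.name)
        elif isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Load):
                used.add(node.id)
            else:  # Store or Del
                defined.add(node.id)
    return used - defined


if __name__ == "__main__":
    generated = (
        "def create_lobby(host):\n"
        "    manager = UserProfileManager()\n"
        "    return manager.load(host)\n"
    )
    print(find_undefined_names(generated))  # {'UserProfileManager'}
```

Running it over main.py after each AI edit, for example `find_undefined_names(Path("main.py").read_text())` with `from pathlib import Path`, would flag a missing class before it surfaces as a runtime `NameError`.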

Developer Experience

Working with Gemini felt like collaborating with a focused senior developer who prioritized efficiency. The development process was predictable, with code that worked after minimal iterations. Direct instructions yielded expected results without elaborate context-setting.

Sonnet felt more like partnering with a creative designer-developer who constantly suggested improvements. This led to more engaging UI elements but required more management to keep the project on track.

Strategic Use in AI Workflows

Knowing when to switch between models is part of an effective AI-enhanced coding process:

- Use Gemini early for fast results when:

  - Building core functionality

  - Working against tight deadlines

  - Implementing complex algorithms

  - Debugging critical issues

- Switch to Sonnet for refinement and polish when:

  - Refining front-end aesthetics

  - Enhancing user experience

  - Adding creative visual elements

  - Creating documentation

  - Developing onboarding flows

Switching Models Mid-Project

Cursor's flexibility allows switching models as needed, even mid-project. Here's how:

- Click the model selector in the top right corner

- Choose between Sonnet 3.7 and Gemini 2.5 Pro

- Continue your work with the new model

The change takes effect immediately with no project reloading required. The new model will have access to open files and recent chat history.

For more detail, see our Cursor for Beginners guide, which covers modes, effective prompting styles, and troubleshooting tips.

Best AI Model for Front-End vs Back-End Development

In this test, Gemini 2.5 Pro outperformed Sonnet 3.7 in reliability, speed, and adherence to instructions. It's the right choice for developers who want results quickly without micromanaging the output.

Sonnet 3.7 is a strong follow-up when UI design, animations, or polish matter; just expect to spend more time cleaning up the logic.

For teams, the ideal approach might be using Gemini for back-end development and core functionality, while leveraging Sonnet for front-end refinement.

Common Questions:

Can I use both models in the same project?

Yes, Cursor allows switching between models even mid-project.

Which model is better for beginners?

Gemini 2.5 Pro tends to be more beginner-friendly, as it produces more predictable code that requires less debugging.

Do these models perform better with specific programming languages?

Both handle Python, JavaScript, HTML, and CSS extremely well. For other languages such as Rust or Go, Gemini showed slightly better accuracy.

Related Resources

Cursor vs Windsurf: Which AI Coding IDE is Best? — A deeper dive into the platforms these models run on.

Beginner's Guide to Cursor AI — Understand modes, models, and how to prompt effectively.

Model Selection Strategy for Efficient Dev Cycles

If you're coding within Cursor, choosing the right LLM for each phase of development can significantly impact velocity and output quality:

- Deploy faster with Gemini — optimized for code generation and throughput.

- Refine with Sonnet — ideal for cleanup, formatting, and structural edits.

Strategically toggling between models helps you maintain flow state and reduce iteration overhead—especially when building dynamic applications like AI-driven games or tools.

Want a broader platform comparison? Cursor vs Windsurf offers a detailed look at IDE-level trade-offs, agent handling, and developer UX.

Need Custom AI Stack Optimization?

If you're integrating LLMs, coding agents, or multi-model workflows into your architecture, we offer hands-on AI engineering support. From prompt tuning to IDE integration and deployment strategy, we help you align tooling with your build process.

Connect with our technical team to assess your current setup and identify bottlenecks. Book a strategy session to map out a stack that supports rapid iteration and scalable delivery.